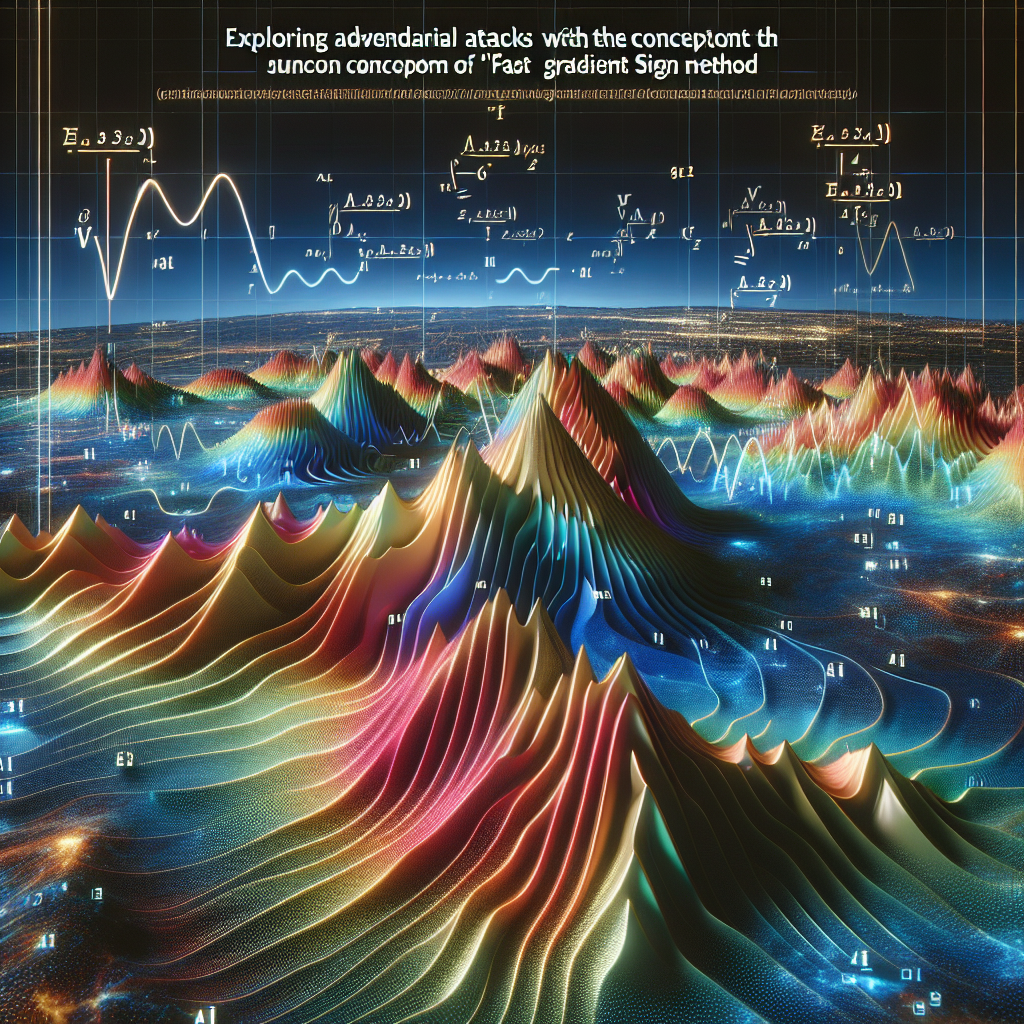

Exploring Adversarial Attacks with Fast Gradient Sign Method

Researchers use the Fast Gradient Sign Method (FGSM) to study adversarial attacks on machine learning models and to develop defenses against them. An adversarial attack manipulates input data so that a model makes an incorrect prediction; the manipulated inputs are called adversarial examples. FGSM is a popular technique for generating them.

Understanding Adversarial Attacks

Adversarial attacks are a growing concern in machine learning. By making small, often imperceptible changes to input data, an attacker can trick a model into misclassifying the input or producing another incorrect output. This poses a significant threat in domains such as autonomous vehicles, cybersecurity, and healthcare.

The Fast Gradient Sign Method (FGSM)

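The Fast Gradient Sign Method (FGSM) is a technique for generating adversarial examples. It computes the gradient of the loss function with respect to the input and then shifts every input feature by a fixed step ε in the direction given by the sign of that gradient, which is a direction that increases the loss: x_adv = x + ε · sign(∇x J(θ, x, y)), where J is the loss, θ the model parameters, and y the true label. Because it needs only a single gradient computation, the attack is fast. The perturbation is typically small enough to be imperceptible to humans but can significantly change the model’s predictions.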
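The perturbation step described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: it assumes a two-feature logistic-regression model, for which the input gradient of the cross-entropy loss has the closed form (p - y) · w, and the weights, input, and `epsilon` value are hypothetical numbers chosen only so that the effect is visible.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def input_gradient(w, b, x, y):
    """Gradient of the cross-entropy loss with respect to the input x.

    For logistic regression this has the closed form (p - y) * w.
    """
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, epsilon):
    """One FGSM step: x_adv = x + epsilon * sign(grad_x loss)."""
    grad = input_gradient(w, b, x, y)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input, chosen only for illustration.
w, b = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1

x_adv = fgsm(w, b, x, y, epsilon=0.25)

print(predict_proba(w, b, x))      # about 0.60, so the clean input is classified as 1
print(predict_proba(w, b, x_adv))  # about 0.41, so the perturbed input is classified as 0
```

In this two-dimensional toy the step of 0.25 is large relative to the input. On high-dimensional inputs such as images, a much smaller step per feature can add up to a comparable change in the model's output, which is why the perturbation can remain hard to see.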

Exploring FGSM’s Effectiveness

Researchers evaluate FGSM by applying it to different models and datasets and checking how often the resulting adversarial examples change each model’s predictions. This gives a measure of how robust a model is against adversarial attacks. The main findings are:

- FGSM can generate adversarial examples that fool machine learning models.

- Its effectiveness varies with the model architecture and the dataset.

- Some models are more vulnerable to FGSM attacks, while others are more resilient.
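One common way to run such an evaluation is to sweep the perturbation size and record accuracy on the attacked inputs. The sketch below assumes a fixed linear classifier and a synthetic dataset whose labels are taken from the classifier itself, so clean accuracy is 100% by construction; the helper name `accuracy_under_fgsm` and all numbers are hypothetical and do not reproduce any published result.

```python
import math
import random

def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0

def fgsm(w, b, x, y, epsilon):
    """FGSM step for logistic regression; the input gradient is (p - y) * w."""
    p = 1.0 / (1.0 + math.exp(-score(w, b, x)))
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

def accuracy_under_fgsm(w, b, data, epsilon):
    """Fraction of examples still classified correctly after the attack."""
    correct = sum(predict(w, b, fgsm(w, b, x, y, epsilon)) == y for x, y in data)
    return correct / len(data)

# Hypothetical setup: a fixed model and 200 random points labeled by that model.
rng = random.Random(0)
w, b = [1.0, 1.0], 0.0
data = []
for _ in range(200):
    x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    data.append((x, predict(w, b, x)))

epsilons = [0.0, 0.1, 0.2, 0.4]
results = {eps: accuracy_under_fgsm(w, b, data, eps) for eps in epsilons}
for eps, acc in results.items():
    print(f"epsilon={eps:.1f}  accuracy={acc:.2f}")
```

Running the same sweep against several models or datasets gives accuracy-versus-epsilon curves that can be compared directly: a curve that drops quickly indicates a more vulnerable model.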

Countering Adversarial Attacks

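Understanding FGSM and its impact on machine learning models is crucial for developing effective defenses against adversarial attacks. Researchers are exploring several techniques to make models more robust: adversarial training, which adds adversarial examples to the training data; defensive distillation, which trains a second model on the softened output probabilities of the first; and input preprocessing, which transforms inputs before they reach the model to weaken any perturbation.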
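As an illustration of the first of these defenses, adversarial training can be sketched as ordinary gradient descent in which each training example is paired with an FGSM example crafted against the current parameters. This is a simplified sketch under stated assumptions: the model is logistic regression, and the function name `adversarial_train`, the toy data, the learning rate, and `epsilon` are all hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm(w, b, x, y, epsilon):
    """Craft an FGSM example against the current parameters (w, b)."""
    p = sigmoid(score(w, b, x))
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

def adversarial_train(data, epsilon, epochs=50, lr=0.1):
    """SGD for logistic regression on each clean example and its FGSM copy."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            # The adversarial copy is regenerated at every step because it
            # depends on the parameters that are being trained.
            for example in (x, fgsm(w, b, x, y, epsilon)):
                err = sigmoid(score(w, b, example)) - y  # d(loss)/d(score)
                w = [wj - lr * err * xj for wj, xj in zip(w, example)]
                b -= lr * err
    return w, b

# Hypothetical, linearly separable toy data.
data = [([1.0, 0.8], 1), ([-0.9, -1.0], 0), ([0.7, 1.0], 1), ([-1.0, -0.6], 0)]
w, b = adversarial_train(data, epsilon=0.2)
print(w, b)
```

Setting `epsilon=0.0` makes the adversarial copy identical to the clean example, which gives a baseline for comparing how the trained model holds up under attack.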

Summary

The Fast Gradient Sign Method (FGSM) generates adversarial examples and is used to test how vulnerable machine learning models are to them. Its success in fooling models highlights the need for robust defenses against adversarial attacks. By understanding when FGSM is effective and exploring countermeasures, we can improve the security and reliability of machine learning models in many domains.